Overview

We present a novel in-situ dataset for animal behavior recognition from drone videos. The dataset, curated from videos of Kenyan wildlife, currently contains behaviors of giraffes, plains zebras, and Grevy’s zebras, and will soon be expanded to other species, including baboons. The videos were collected by flying drones over animals at the Mpala Research Centre in Kenya in January 2023. The dataset consists of more than 10 hours of extracted videos, each centered on a particular animal and annotated with seven types of behaviors along with an additional category for occluded views. Ten non-experts contributed annotations, overseen by an expert in animal behavior who developed a standardized set of criteria to ensure consistency and accuracy across the annotations. The drone videos were recorded under Research License No. NACOSTI/P/22/18214, following a protocol that strictly adheres to the guidelines set forth by the Institutional Animal Care and Use Committee under permission No. IACUC 1835F. This dataset will be a valuable resource for experts in both machine learning and animal behavior:

- It provides challenging data for the development of new machine-learning algorithms for animal behavior recognition. It complements recently released, larger datasets built from videos scraped from online sources: because it was gathered in-situ, it is more representative of how behavior recognition algorithms may be used in practice.
- It demonstrates the effectiveness of a new animal behavior curation pipeline for videos collected in-situ using drones.
- It provides a test set for evaluating the impact of research scientists who study animal behavior changing their fieldwork protocols to use drones and recorded videos.

We provide a detailed description of the dataset and its annotation process, along with some initial experiments on the dataset using conventional deep learning models. The results demonstrate the effectiveness of the dataset for animal behavior recognition and highlight the potential for further research in this area.

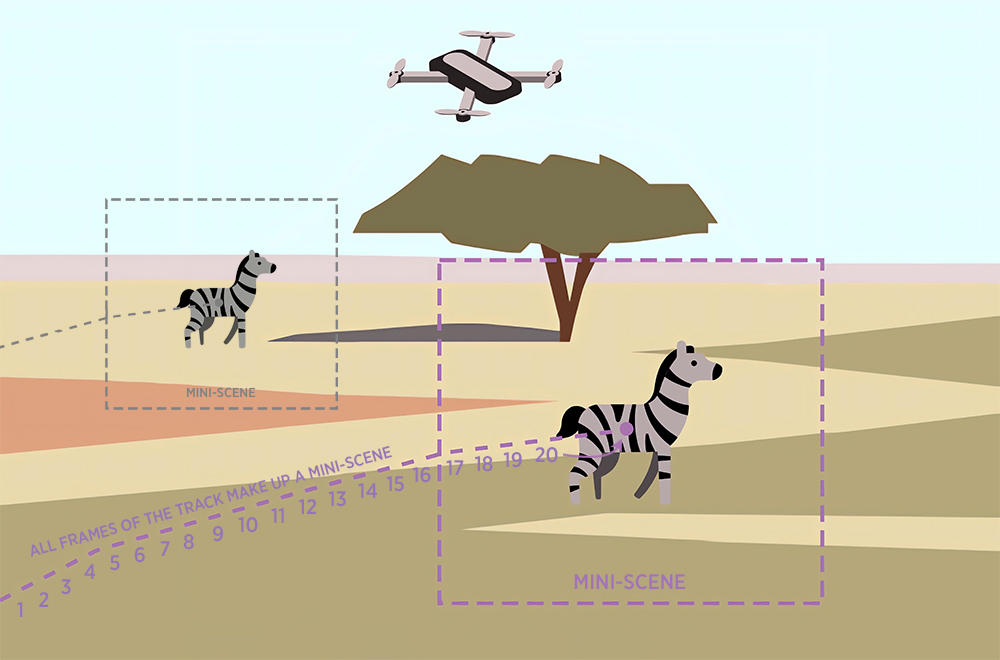

Mini-Scenes

Our approach to curating animal behaviors from drone videos is a method that we refer to as mini-scenes extraction. We use object detection and tracking methods to simulate centering the camera’s field of view on each individual animal and zooming in on the animal and its immediate surroundings. This compensates for drone and animal movements and provides a focused context for categorizing individual animal behavior. The study of social interactions and group behaviors, while not the subject of the current work, may naturally be based on combinations of mini-scenes.

Extraction

To implement our mini-scenes approach, we use the YOLOv8 object detection algorithm to detect the animals in each frame and an improved version of the SORT tracking algorithm to link those detections into tracks across frames. We then extract a small window around the animal in each frame of a resulting track to create a single mini-scene.
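A minimal sketch of the window-extraction step, assuming each track is a mapping from frame index to a bounding box in (x1, y1, x2, y2) pixel coordinates and that frames are NumPy arrays. The helper names `crop_centered` and `extract_mini_scene`, the 400-pixel default window, and zero-filling at the frame border are illustrative assumptions, not the kabr-tools implementation.

```python
import numpy as np


def crop_centered(frame: np.ndarray, box, size: int) -> np.ndarray:
    """Return a size x size window centered on the box center.

    Parts of the window that fall outside the frame are filled with zeros
    (an assumption), so every crop has the same shape even near the border.
    """
    h, w = frame.shape[:2]
    x1, y1, x2, y2 = box
    cx = int(round((x1 + x2) / 2))
    cy = int(round((y1 + y2) / 2))
    top, left = cy - size // 2, cx - size // 2
    out = np.zeros((size, size) + frame.shape[2:], dtype=frame.dtype)
    # Intersection of the requested window with the frame.
    r0, c0 = max(top, 0), max(left, 0)
    r1, c1 = min(top + size, h), min(left + size, w)
    if r1 > r0 and c1 > c0:
        out[r0 - top:r1 - top, c0 - left:c1 - left] = frame[r0:r1, c0:c1]
    return out


def extract_mini_scene(frames, track, size: int = 400) -> np.ndarray:
    """Stack one centered crop per tracked frame (hypothetical helper).

    `track` maps frame index -> (x1, y1, x2, y2); crops are ordered by frame.
    """
    return np.stack([crop_centered(frames[i], track[i], size) for i in sorted(track)])
```

Keeping the window size fixed, rather than scaling it to the box, gives every mini-scene the same resolution, which is convenient for video classifiers; whether KABR crops at a fixed size is not stated here.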

Examples of mini-scenes extraction are available on YouTube: Giraffes, Zebras.

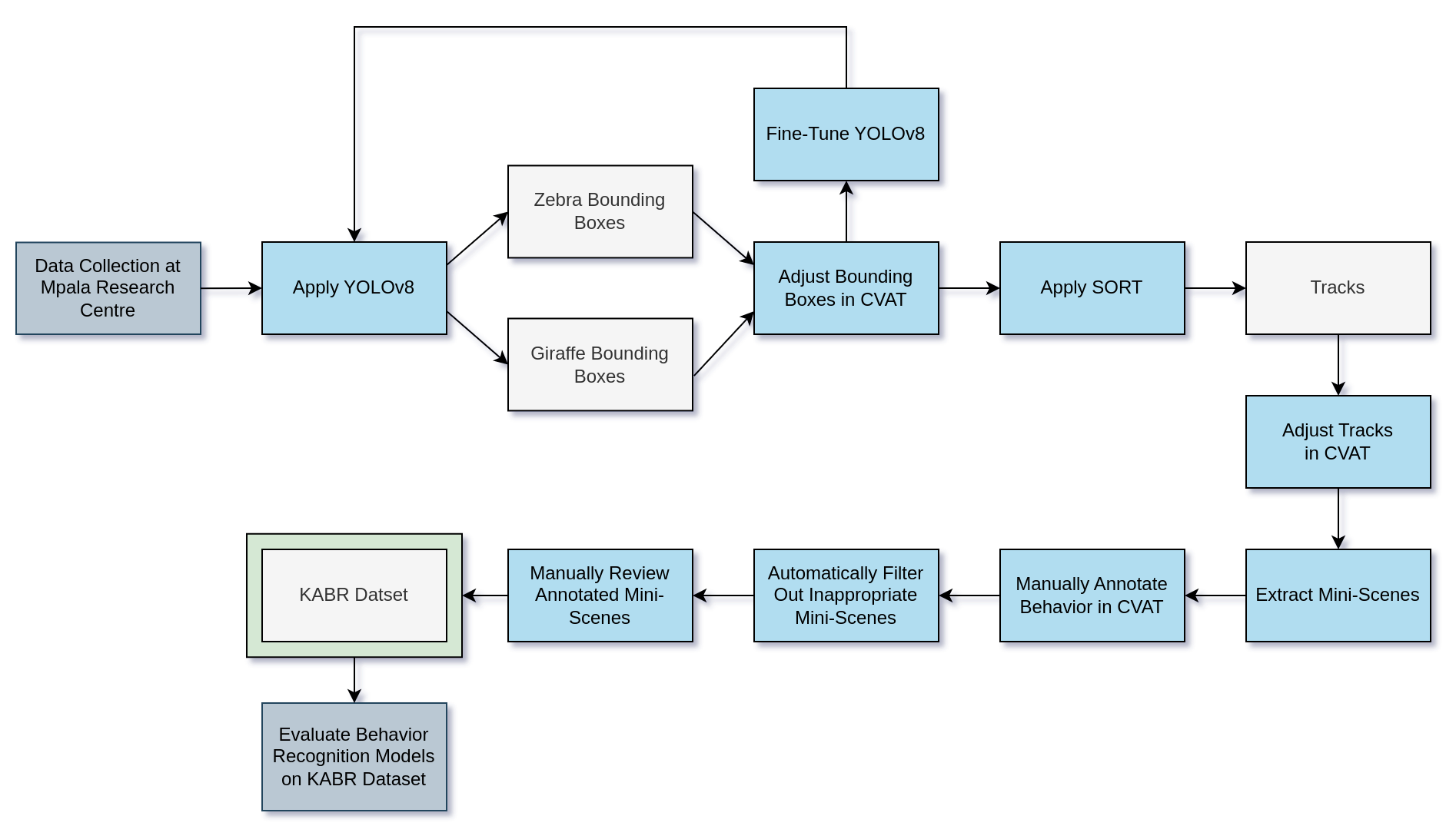

Data Collection and Processing

The drone videos were collected by our team at Mpala Research Centre, Kenya, in January 2023. Behaviors of giraffes, plains zebras, and Grevy’s zebras were recorded using DJI Mavic 2S drones at 5.4K resolution.

We developed kabr-tools to create a layer between animal detection and manual correction of detected coordinates. These tools enable the identification of any inaccuracies in the automated process and provide a way to correct them in a timely and efficient manner. We also developed an interpolation tool that fills in any missed detections within a track, thereby increasing the overall tracking quality. This tool uses an algorithm that estimates the animal’s location based on its previous movements, helping to fill in gaps where the automated detection may have failed. The complete data processing pipeline for the KABR dataset annotation is shown below:

Our team relied heavily on CVAT to manually adjust bounding boxes detected by YOLOv8, merge tracks produced by the improved SORT tracker, and manually annotate behaviors in the extracted mini-scenes.
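The interpolation tool above fills missed detections inside a track. As a simple stand-in, the sketch below uses plain linear interpolation between the detections on either side of a gap; the text describes the kabr-tools algorithm as estimating location from the animal's previous movements, so the actual method may differ. The function name `fill_track_gaps` and the frame-index-to-box track layout are assumptions.

```python
import numpy as np


def fill_track_gaps(track):
    """Linearly interpolate boxes for frames missing inside a track.

    `track` maps frame index -> (x1, y1, x2, y2). Every frame between the
    first and last detection gets a box; existing detections are unchanged,
    and nothing is extrapolated beyond the ends of the track.
    """
    known = sorted(track)
    boxes = np.array([track[f] for f in known], dtype=float)
    all_frames = np.arange(known[0], known[-1] + 1)
    # np.interp handles one coordinate at a time, so interpolate each column.
    filled = np.column_stack(
        [np.interp(all_frames, known, boxes[:, k]) for k in range(4)]
    )
    return {int(f): tuple(float(v) for v in row) for f, row in zip(all_frames, filled)}
```

For example, a track with detections at frames 0 and 4 receives boxes for frames 1, 2, and 3 placed evenly along the straight line between the two known boxes.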

Examples

The dataset includes eight categories: seven behaviors (Walk, Graze, Browse, Head Up, Auto-Groom, Trot, and Run) and Occluded, which marks views where the animal is not clearly visible.

Figure: example mini-scenes for each category (Walk, Graze, Browse, Head Up, Auto-Groom, Trot, Run, Occluded).

Experiments

We evaluate I3D, SlowFast, and X3D models on our dataset and report Top-1 accuracy for all species combined and for giraffes, plains zebras, and Grevy’s zebras separately.

| Method | All | Giraffes | Plains Zebras | Grevy’s Zebras |
|---|---|---|---|---|
| I3D (16x5) | 53.41 | 61.82 | 58.75 | 46.73 |
| SlowFast (16x5, 4x5) | 52.92 | 61.15 | 60.60 | 47.42 |
| X3D (16x5) | 61.9 | 65.1 | 63.11 | 51.16 |
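Top-1 accuracy is the percentage of clips whose highest-scoring predicted class matches the annotated behavior. A minimal sketch of computing it overall and per species, assuming model scores are collected into an (N, C) array with one species tag per clip; the function names are hypothetical, and averaging the "All" column over clips is an assumption about how the table was aggregated, not the evaluation code behind it.

```python
import numpy as np


def top1_accuracy(logits, labels) -> float:
    """Percentage of samples whose highest-scoring class equals the label."""
    preds = np.asarray(logits).argmax(axis=1)
    return 100.0 * float(np.mean(preds == np.asarray(labels)))


def top1_by_group(logits, labels, groups) -> dict:
    """Top-1 accuracy over all clips ("All") and within each group (e.g. species)."""
    logits, labels, groups = np.asarray(logits), np.asarray(labels), np.asarray(groups)
    results = {"All": top1_accuracy(logits, labels)}
    for g in np.unique(groups):
        mask = groups == g
        results[str(g)] = top1_accuracy(logits[mask], labels[mask])
    return results
```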

Extended evaluation is available here.

Demo

Here you can see an example of the performance of the X3D model on unseen data.

Visualization

We use Grad-CAM to visualize which regions of a mini-scene drive the network’s predictions. By analyzing the gradient information flowing into the final convolutional layers of the network, Grad-CAM generates a heat map that highlights the regions of the image that contribute most significantly to the network’s decision. These heat maps show that the network typically prioritizes the animal in the center of the frame. Interestingly, for the Run behavior, because the animal remains in the center of the mini-scene, its movement is visible mainly through the shifting background, and the network uses more of the background to classify it. For the Occluded category, where the animal is partially or completely hidden within the frame, the network shifts its attention to other objects present.
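The Grad-CAM computation itself is short once a layer's activations and the gradients of the target class score with respect to them have been captured (for example with framework hooks, which are not shown). A minimal NumPy sketch, assuming both arrays have shape (K, ...) with K channels followed by spatial (H, W) or spatio-temporal (T, H, W) axes; the function name is hypothetical, and upsampling the map to the mini-scene resolution for overlay is omitted.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heat map from one conv layer's activations and gradients.

    Both inputs have shape (K, ...). Returns a map over the non-channel
    axes, scaled to [0, 1].
    """
    non_channel_axes = tuple(range(1, activations.ndim))
    # Channel weights: global-average-pool the gradients.
    weights = gradients.mean(axis=non_channel_axes)
    # Weighted sum of the activation maps over channels.
    cam = np.tensordot(weights, activations, axes=(0, 0))
    # Keep only regions with a positive influence on the target class.
    cam = np.maximum(cam, 0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

For video models such as X3D the returned map keeps a time axis, so one heat map per sampled frame can be overlaid on the mini-scene.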


Format

The KABR dataset follows the Charades format:

KABR
  /images
    /video_1
      /image_1.jpg
      /image_2.jpg
      ...
      /image_n.jpg
    /video_2
      /image_1.jpg
      /image_2.jpg
      ...
      /image_n.jpg
    ...
    /video_n
      /image_1.jpg
      /image_2.jpg
      /image_3.jpg
      ...
      /image_n.jpg
  /annotation
    /classes.json
    /train.csv
    /val.csv

The dataset can be directly loaded and processed by the SlowFast framework.
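The columns of train.csv and val.csv are not described here. Assuming they use the space-separated frame-list layout that SlowFast reads for Charades (one header row, then one row per frame with original_vido_id, video_id, frame_id, path, and comma-separated class indices), a minimal reader that groups frames by video might look like this. The column layout and the name `read_frame_list` are assumptions to check against the actual files.

```python
from collections import defaultdict


def read_frame_list(lines):
    """Group frame paths and per-frame labels by video.

    Assumed layout (space-separated, one header row):
        original_vido_id video_id frame_id path labels
    where `labels` may hold several comma-separated class indices.
    """
    frames = defaultdict(list)
    labels = defaultdict(list)
    rows = iter(lines)
    next(rows)  # skip the header row
    for line in rows:
        parts = line.split()
        if not parts:
            continue  # tolerate blank lines
        video, _video_idx, _frame_idx, path, frame_labels = parts
        frames[video].append(path)
        labels[video].append([int(x) for x in frame_labels.strip('"').split(",") if x])
    return dict(frames), dict(labels)
```

Usage: `with open("KABR/annotation/train.csv") as f: frames, labels = read_frame_list(f)`.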

Naming

- G0XXX.X: Giraffes
- ZP0XXX.X: Plains Zebras
- ZG0XXX.X: Grevy's Zebras
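The prefixes in the naming scheme above are enough to recover the species of a clip, for example when splitting results by species. A minimal sketch, assuming "X" stands for a single digit before the dot and that the number after the dot can have several digits (as in G0067.24 in the Scripts example); the regular expression and the name `parse_video_id` are hypothetical.

```python
import re

# Prefixes from the KABR naming scheme.
SPECIES_BY_PREFIX = {
    "G": "Giraffes",
    "ZP": "Plains Zebras",
    "ZG": "Grevy's Zebras",
}

# Assumed pattern: prefix, "0", three digits, ".", then one or more digits.
VIDEO_ID = re.compile(r"^(G|ZP|ZG)(0\d{3})\.(\d+)$")


def parse_video_id(video_id: str):
    """Split an ID such as "G0067.1" into (species, base ID, number after the dot)."""
    match = VIDEO_ID.match(video_id)
    if match is None:
        raise ValueError(f"unrecognized KABR video ID: {video_id!r}")
    prefix, number, part = match.groups()
    return SPECIES_BY_PREFIX[prefix], prefix + number, int(part)
```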

Information

KABR/configs: examples of SlowFast framework configs.

KABR/annotation/distribution.xlsx: distribution of classes for all videos.

Scripts

We provide image2video.py and image2visual.py scripts to facilitate exploratory data analysis.

image2video.py: Encode image sequences into the original video.

For example, [image/G0067.1, image/G0067.2, ..., image/G0067.24] will be encoded into video/G0067.mp4.

image2visual.py: Encode image sequences into the original video with corresponding annotations.

For example, [image/G0067.1, image/G0067.2, ..., image/G0067.24] will be encoded into visual/G0067.mp4.
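In both examples, every image sequence that shares a base video ID (G0067) is collected before encoding. A minimal sketch of that grouping step, assuming sequence directories are named <video ID>.<number> as shown; the helper name `group_sequences` is hypothetical, and the frame encoding itself (for instance with OpenCV or ffmpeg) is left out.

```python
from collections import defaultdict
from pathlib import PurePath


def group_sequences(paths):
    """Map each base video ID to its sequence directories in numeric order.

    "image/G0067.2" and "image/G0067.10" both belong to "G0067"; sorting on
    the integer suffix keeps .10 after .9 instead of after .1.
    """
    groups = defaultdict(list)
    for p in paths:
        base, _, part = PurePath(p).name.rpartition(".")
        groups[base].append((int(part), p))
    return {base: [p for _, p in sorted(items)] for base, items in groups.items()}
```

Each group would then be written to one output file, for example `f"video/{base}.mp4"` for image2video.py or `f"visual/{base}.mp4"` for image2visual.py.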

Acknowledgments

This material is based upon work supported by the National Science Foundation under Award No. 2118240 and Award No. 2112606 (AI Institute for Intelligent Cyberinfrastructure with Computational Learning in the Environment (ICICLE)). Any opinions, findings, conclusions, or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

Citation

KABR: In-Situ Dataset for Kenyan Animal Behavior Recognition from Drone Videos

@inproceedings{kholiavchenko2024kabr,
  title={KABR: In-Situ Dataset for Kenyan Animal Behavior Recognition from Drone Videos},
  author={Kholiavchenko, Maksim and Kline, Jenna and Ramirez, Michelle and Stevens, Sam and Sheets, Alec and Babu, Reshma and Banerji, Namrata and Campolongo, Elizabeth and Thompson, Matthew and Van Tiel, Nina and Miliko, Jackson and Bessa, Eduardo and Duporge, Isla and Berger-Wolf, Tanya and Rubenstein, Daniel and Stewart, Charles},
  booktitle={Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision},
  pages={31--40},
  year={2024}
}

Deep Dive into KABR: A Dataset for Understanding Ungulate Behavior from In-Situ Drone Video

@article{kholiavchenko2024deep,
  title={Deep dive into KABR: a dataset for understanding ungulate behavior from in-situ drone video},
  author={Kholiavchenko, Maksim and Kline, Jenna and Kukushkin, Maksim and Brookes, Otto and Stevens, Sam and Duporge, Isla and Sheets, Alec and Babu, Reshma R and Banerji, Namrata and Campolongo, Elizabeth and others},
  journal={Multimedia Tools and Applications},
  pages={1--20},
  year={2024},
  publisher={Springer}
}

Download KABR from Google Drive

Paper #1
